I think each of us has wondered at least once over the past year if (or rather when) ChatGPT will be able to replace our roles. I’m no exception here.

There is a broad consensus that the recent breakthroughs in Generative AI will significantly affect our personal lives and work. However, there is no clear view yet of how our roles will change over time.

Spending lots of time thinking about different possible future scenarios and their probabilities might be captivating, but I suggest an entirely different approach: try to build your own prototype. First, it’s rather challenging and fun. Second, it will help us to look at our work in a more structured way. Third, it will give us an opportunity to try in practice one of the most cutting-edge approaches: LLM agents.

In this article, we will start simple and learn how LLMs can leverage tools and do straightforward tasks. But in the following articles, we will dive deeper into different approaches and best practices for LLM agents.

So, let the journey begin.

What is data analytics?

Before moving on to the LLMs, let’s try defining what analytics is and what tasks we do as analysts.

My motto is that the goal of the analytical team is to help the product teams make the right decisions based on data in the available time. It’s a good mission, but to define the scope of the LLM-powered analyst, we should decompose the analytical work further.

I like the framework proposed by Gartner. It identifies four different Data and Analytics techniques:

- Descriptive analytics answers questions like “What happened?”. For example, what was the revenue in December? This approach includes reporting tasks and working with BI tools.

- Diagnostic analytics goes a bit further and asks questions like “Why did something happen?”. For example, why did revenue decrease by 10% compared to the previous year? This technique requires more drill-down and slicing & dicing of your data.

- Predictive analytics allows us to get answers to questions like “What will happen?”. The two cornerstones of this approach are forecasting (predicting the future for business-as-usual situations) and simulation (modelling different possible outcomes).

- Prescriptive analytics impacts the final decisions. The common questions are “What should we focus on?” or “How could we increase volume by 10%?”.

Usually, companies go through all these stages step by step. It’s almost impossible to start looking at forecasts and different scenario analyses if your company hasn’t mastered descriptive analytics yet (you don’t have a data warehouse, BI tools, or metrics definitions). So, this framework can also show the company’s data maturity.

Similarly, when an analyst grows from junior to senior level, she will likely go through all these stages, starting from well-defined reporting tasks and progressing to vague strategic questions. So, this framework is relevant on an individual level as well.

If we return to our LLM-powered analyst, we should focus on descriptive analytics and reporting tasks, since it’s better to start from the basics. So, we will focus on teaching the LLM to understand basic questions about data.

We’ve defined our focus for the first prototype. So, we are ready to move on to the technical questions and discuss the concept of LLM agents and tools.

LLM agents and tools

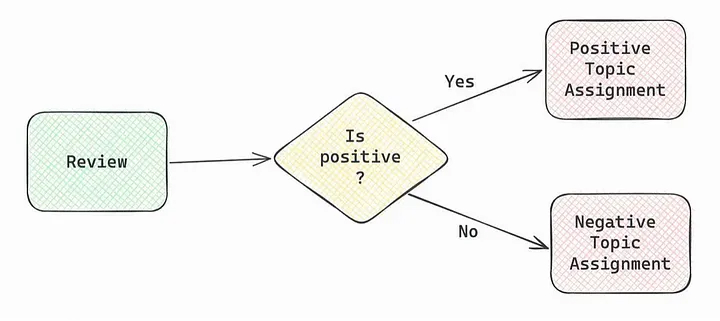

When we were using LLMs before (for example, to do topic modelling), we described the exact steps ourselves in the code. For example, let’s look at the chain below. First, we asked the model to determine the sentiment of a customer review. Then, depending on the sentiment, we asked it to extract either the advantages or the disadvantages mentioned in the review.

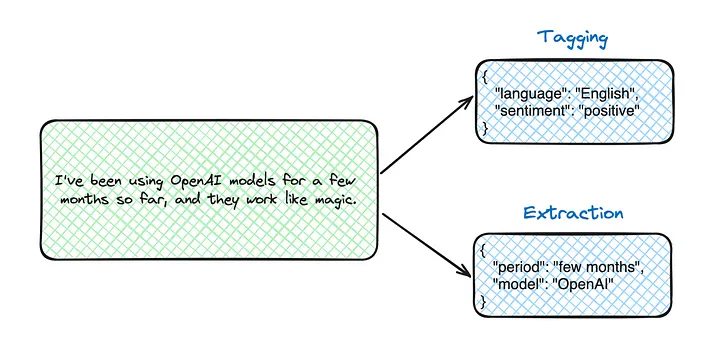
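To make the idea of a hard-coded chain concrete, here is a minimal sketch of the sentiment-then-extraction flow in Python. It is an illustration under assumptions, not the code from the original chain: the function names, the prompts, and the `fake_llm` stand-in (used so the example runs without any API access) are all hypothetical. The point is that the order of steps and the branching live in our code, not in the model.

```python
from typing import Callable

# Hypothetical LLM interface: takes a prompt, returns the model's text reply.
LLM = Callable[[str], str]


def classify_sentiment(llm: LLM, review: str) -> str:
    """Step 1: ask the model for the sentiment of the review."""
    prompt = (
        "Classify the sentiment of the customer review as 'positive' or "
        "'negative'. Reply with one word.\n\nReview: " + review
    )
    answer = llm(prompt).strip().lower()
    return "positive" if answer.startswith("positive") else "negative"


def extract_points(llm: LLM, review: str, sentiment: str) -> list[str]:
    """Step 2: the branch is decided by our code, based on step 1."""
    target = "advantages" if sentiment == "positive" else "disadvantages"
    prompt = (
        f"List the {target} mentioned in the review, one per line."
        f"\n\nReview: {review}"
    )
    lines = llm(prompt).splitlines()
    # Drop empty lines and leading list markers such as "- ".
    return [line.lstrip("- ").strip() for line in lines if line.strip()]


def review_chain(llm: LLM, review: str) -> dict:
    """Run both steps in the fixed order we defined ourselves."""
    sentiment = classify_sentiment(llm, review)
    return {
        "sentiment": sentiment,
        "points": extract_points(llm, review, sentiment),
    }


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (assumption), with canned replies."""
    if prompt.startswith("Classify"):
        return "negative" if "slow" in prompt else "positive"
    if "disadvantages" in prompt:
        return "- slow delivery"
    return "- friendly staff"


if __name__ == "__main__":
    print(review_chain(fake_llm, "Delivery was slow."))
```

In a real setup, `fake_llm` would be replaced by a call to an actual model; the rest of the chain would stay the same, which is exactly what makes it rigid.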

Use Case #1: Tagging & Extraction

You might wonder what the difference between tagging and extraction is. These terms are pretty close. The only difference is whether the model extracts information that is present in the text or labels the text with new information (e.g. its language or sentiment).
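The distinction can be illustrated with two function definitions written in the JSON Schema style that OpenAI functions use. This is only a sketch: the function names, the fields, and the example argument strings are hypothetical and hand-written (they are not model output), and no API call is made. The `parse_arguments` helper is likewise an assumption about how one might decode the JSON string of arguments that a model returns.

```python
import json

# Tagging: the model labels the text with information that is not
# literally written in it (language, sentiment).
tagging_function = {
    "name": "tag_review",  # hypothetical name
    "description": "Label a customer review.",
    "parameters": {
        "type": "object",
        "properties": {
            "language": {
                "type": "string",
                "description": "Language of the review, e.g. 'en'",
            },
            "sentiment": {
                "type": "string",
                "enum": ["positive", "negative", "neutral"],
            },
        },
        "required": ["language", "sentiment"],
    },
}

# Extraction: the model pulls out information that is present in the text.
extraction_function = {
    "name": "extract_review_points",  # hypothetical name
    "description": "Extract points mentioned in a customer review.",
    "parameters": {
        "type": "object",
        "properties": {
            "advantages": {"type": "array", "items": {"type": "string"}},
            "disadvantages": {"type": "array", "items": {"type": "string"}},
        },
        "required": ["advantages", "disadvantages"],
    },
}


def parse_arguments(function: dict, arguments_json: str) -> dict:
    """Decode a JSON arguments string and check the required keys exist."""
    arguments = json.loads(arguments_json)
    required = function["parameters"]["required"]
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"Missing required arguments: {missing}")
    return arguments


if __name__ == "__main__":
    # Hand-written example replies for the review "Delivery was slow."
    print(parse_arguments(
        tagging_function, '{"language": "en", "sentiment": "negative"}'
    ))
    print(parse_arguments(
        extraction_function,
        '{"advantages": [], "disadvantages": ["slow delivery"]}',
    ))
```

Both definitions have the same shape; what differs is whether the requested fields can be copied out of the text (extraction) or have to be inferred about it (tagging).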

Summary

This article taught us how to empower LLMs with external tools using OpenAI functions. We’ve examined two use cases: extraction to get structured output and routing to use external information when answering questions. The final result inspires me since the LLM could answer pretty complex questions using three different tools.

Let’s return to the initial question of whether LLMs can replace data analysts. Our current prototype is basic and far from a junior analyst’s capabilities, but it’s only the beginning. Stay tuned! We will dive deeper into the different approaches to LLM agents. Next time, we will try to create an agent that can access the database and answer basic questions.